Develop and Innovate with NVIDIA H200 SXM

Engineered to accelerate cutting-edge AI workflows and boost HPC performance with expanded memory capacity, now available on demand through ECCENTRIC WORLD NV.

Exceptional Performance In...

Generative AI

Delivers up to twice the performance of the NVIDIA H100 on demanding generative AI workloads, particularly large-model inference.

LLM Inference

Delivers up to 2x the inference performance of the NVIDIA H100 on large language models such as Llama 2 70B.

High-Performance Computing

Delivers up to 110x faster time to results than dual x86 CPUs on MILC, a lattice QCD simulation code, making it well suited to memory-intensive computational tasks.

AI & Machine Learning

Delivers up to 3,958 TFLOPS of FP8 Tensor Core compute (with sparsity) to substantially accelerate AI model training.

Key Features of NVIDIA H200 SXM

Adaptable Configuration

Deploy our n3-H200-141G-SXM5x8 configuration, which combines eight H200 SXM GPUs in a single VM, for enterprise-scale generative AI, model training, and memory-intensive simulations.

Rapid Networking

Get networking speeds of up to 350 Gbps for fast data transfer and low latency in large-scale, high-throughput workloads.

Extensive NVMe Storage

Access 32 TB of ultra-fast NVMe ephemeral storage, ideal for high-velocity data processing, temporary caching, and staging large training datasets.

Substantial RAM Capacity

Use 1,920 GB of system RAM to run large AI models, handle big datasets, and support compute-intensive applications without running short of host memory.

Snapshot Recovery

Create system snapshots that capture your H200 SXM VM's exact state, including configurations and bootable volumes, for quick restoration and disaster recovery.

Dedicated Boot Volume

Every NVIDIA H200 SXM VM includes a 100 GB bootable volume, storing OS files and essential configurations for reliable system operation.

Technical Specifications

VM: NVIDIA H200 SXM

Generation: N3 (latest 2024 generation)

GPU Memory: 141 GB HBM3e per GPU

| Flavor Name | GPU Memory (per GPU) | GPU Count | CPU Cores | CPU Sockets | RAM (GB) | Root Disk (GB) | Ephemeral Disk (GB) | Region |
|---|---|---|---|---|---|---|---|---|
| n3-H200-141G-SXM5x8 | 141 GB HBM3e | 8 | 192 | 2 | 1920 | 100 | 32000 | CANADA-1 |
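The per-VM totals and per-GPU ratios implied by this table follow from simple arithmetic. The Python sketch below shows that calculation using a hypothetical `Flavor` record and `summarize` helper (illustrative names only, not part of any ECCENTRIC WORLD NV API), and it assumes 1 TB = 1,000 GB, consistent with the 32 TB and 32,000 GB figures on this page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flavor:
    """Hypothetical record of per-VM resources, copied from the specification table."""
    name: str
    gpu_count: int
    gpu_memory_gb: int      # HBM3e per GPU
    cpu_cores: int
    ram_gb: int
    root_disk_gb: int
    ephemeral_disk_gb: int


def summarize(f: Flavor) -> dict:
    """Derive per-VM aggregates and per-GPU ratios from the table values."""
    return {
        "total_gpu_memory_gb": f.gpu_count * f.gpu_memory_gb,
        "cpu_cores_per_gpu": f.cpu_cores // f.gpu_count,
        "ram_gb_per_gpu": f.ram_gb // f.gpu_count,
        "ephemeral_tb": f.ephemeral_disk_gb / 1000,  # assumes 1 TB = 1,000 GB
    }


H200_X8 = Flavor("n3-H200-141G-SXM5x8", 8, 141, 192, 1920, 100, 32000)

if __name__ == "__main__":
    for key, value in summarize(H200_X8).items():
        print(f"{key}: {value}")
```

Running it reports 1,128 GB of total HBM3e per VM, 24 CPU cores and 240 GB of system RAM per GPU, and 32 TB of ephemeral disk.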

Frequently Asked Questions

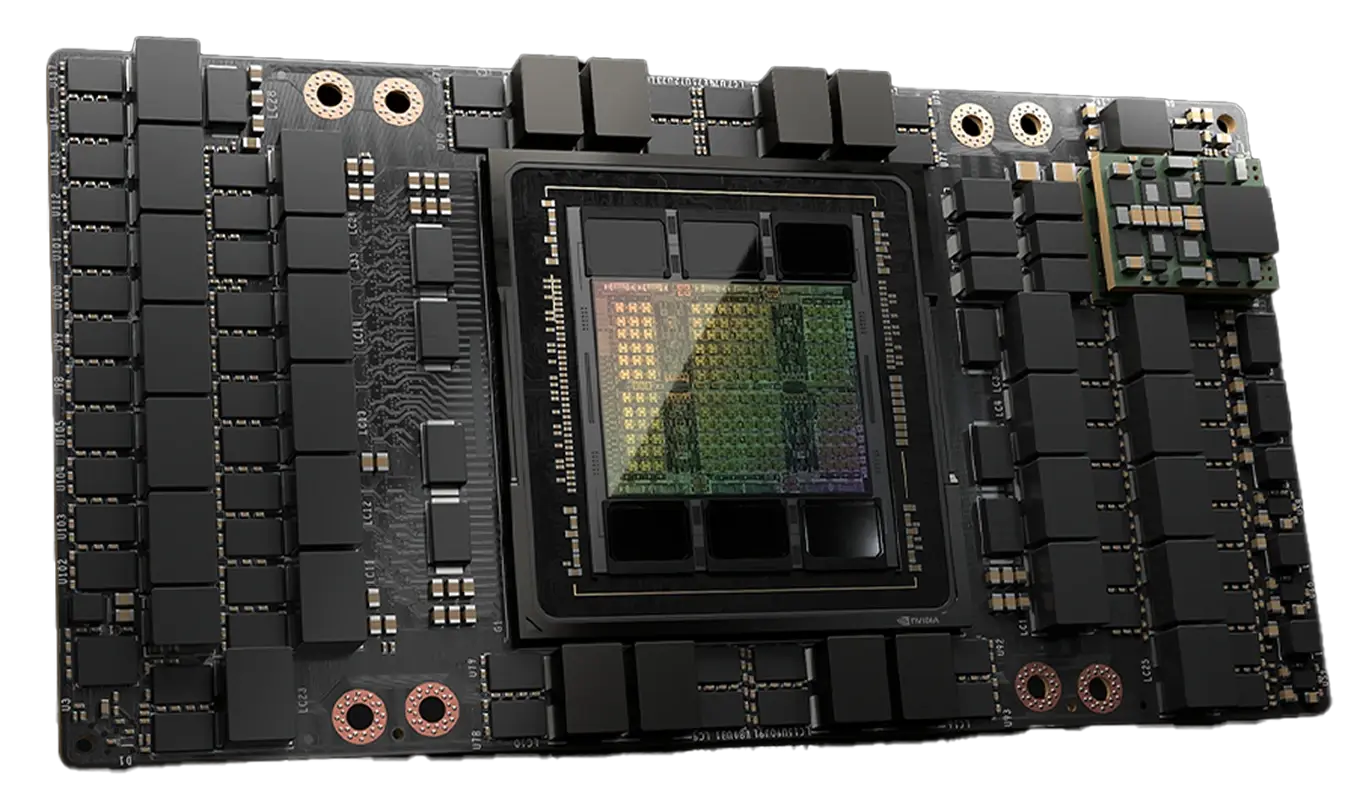

What exactly is the NVIDIA H200 SXM?

The NVIDIA H200 SXM is NVIDIA's cutting-edge data center GPU built on the Hopper architecture. Engineered to accelerate AI, HPC, and generative AI workloads, it pairs 141 GB of HBM3e memory with 4.8 TB/s of memory bandwidth, making it well suited to the most demanding large-scale computational tasks.

How can I start using the NVIDIA H200 SXM on ECCENTRIC WORLD NV?

Getting started is straightforward: log in to our platform to access the NVIDIA H200 SXM immediately. Our streamlined deployment process lets you configure and launch instances in minutes, with no waitlists or quota restrictions.

What memory specifications does the NVIDIA H200 SXM offer?

The NVIDIA H200 SXM comes with 141 GB of HBM3e memory per GPU (1,128 GB across the eight GPUs of the n3-H200-141G-SXM5x8 configuration), providing the capacity to handle memory-intensive AI models and process large datasets efficiently.
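To put 141 GB per GPU in context, a common back-of-the-envelope estimate for model weights is parameter count × bytes per parameter. The Python sketch below applies that rule under stated assumptions: GB means 10^9 bytes, and only weights are counted, ignoring KV cache, activations, and framework overhead, which add substantially in practice. The helper names are hypothetical.

```python
import math

GPU_MEMORY_GB = 141  # HBM3e per H200 SXM GPU, from the specifications
GPUS_PER_VM = 8      # GPUs in the n3-H200-141G-SXM5x8 flavor


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: float) -> int:
    """Smallest number of GPUs whose combined HBM can hold the weights.

    This is a lower bound: it ignores KV cache, activations, and runtime
    overhead, so real deployments need more headroom.
    """
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / GPU_MEMORY_GB)


if __name__ == "__main__":
    params = 70e9  # nominal parameter count of a 70B model
    for label, nbytes in [("FP32", 4), ("FP16/BF16", 2), ("FP8", 1)]:
        gb = weight_memory_gb(params, nbytes)
        gpus = min_gpus_for_weights(params, nbytes)
        print(f"{label}: ~{gb:.0f} GB of weights, at least {gpus} of {GPUS_PER_VM} GPUs")
```

For a nominal 70-billion-parameter model, 16-bit weights come to about 140 GB, which only just fits within a single GPU's 141 GB and leaves almost no headroom, so realistic serving calls for more than one GPU or lower-precision weights.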

Is the NVIDIA H200 SXM appropriate for generative AI development?

The NVIDIA H200 SXM is exceptionally well suited to generative AI. With up to double the inference performance of the previous-generation NVIDIA H100, it significantly accelerates model training and inference, particularly for large-scale models such as GPT variants and Llama 2.

What system RAM is available with NVIDIA H200 SXM configurations?

Our NVIDIA H200 SXM configurations include 1,920 GB of system RAM, providing ample memory for complex computational workflows and helping prevent bottlenecks during the most resource-intensive operations.

What is the power profile of the NVIDIA H200 SXM?

The NVIDIA H200 SXM has a configurable TDP of up to 700 W, delivering high computational performance for the most demanding enterprise-grade AI and HPC applications.

Accessible

Deploy powerful H200 SXM GPUs on demand with no waitlists or capacity constraints.

Affordable

Access cutting-edge GPU technology at competitive rates with transparent pricing.

Efficient

Maximize your investment with high-performance configurations optimized for AI and HPC workloads.