Power Your Innovation with NVIDIA H100 SXM

Built for demanding AI workloads and high-performance computing applications, with advanced memory technology, available on demand through ECCENTRIC WORLD NV.

Exceptional Performance In...

AI Training & Inference

Delivers up to 30x faster inference and up to 9x faster training compared to the previous-generation A100.

LLM Performance

Achieves up to 30x faster processing for large language models, improving model responsiveness at scale.

Single Cluster Scalability

Deploy from 8 to 16,384 cards in a single cluster, offering unprecedented computational power for enterprise-level workloads.
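To make the cluster range concrete, the following is a rough sizing sketch rather than an official calculator. It assumes 8 GPUs per node (taken from the "8x NVIDIA H100 SXM" form factor), 80GB of memory per GPU, and every GPU running at the 700W maximum TDP. The function name `cluster_summary` is hypothetical, and the power figure covers GPUs only, not CPUs, networking, or cooling.

```python
import math

GPUS_PER_NODE = 8        # assumption: one node holds 8x H100 SXM
MEMORY_PER_GPU_GB = 80   # GPU memory per card, from the specifications
MAX_TDP_W = 700          # upper bound per GPU, from the specifications


def cluster_summary(num_gpus: int) -> dict:
    """Return node count, total GPU memory, and peak GPU power for a cluster."""
    if not 8 <= num_gpus <= 16_384:
        raise ValueError("cluster size must be between 8 and 16,384 GPUs")
    return {
        "nodes": math.ceil(num_gpus / GPUS_PER_NODE),
        "total_memory_tb": num_gpus * MEMORY_PER_GPU_GB / 1000,
        "peak_gpu_power_kw": num_gpus * MAX_TDP_W / 1000,
    }


# Print the estimate for the smallest, a mid-sized, and the largest cluster.
for size in (8, 512, 16_384):
    print(size, cluster_summary(size))
```

Under these assumptions, the largest configuration works out to 2,048 nodes and roughly 1.3 PB of aggregate GPU memory.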

Advanced Connectivity

Features 3.2 Tbps connectivity, keeping data movement between CPUs, GPUs, and the network from becoming a bottleneck.

Key Features of NVIDIA H100 SXM

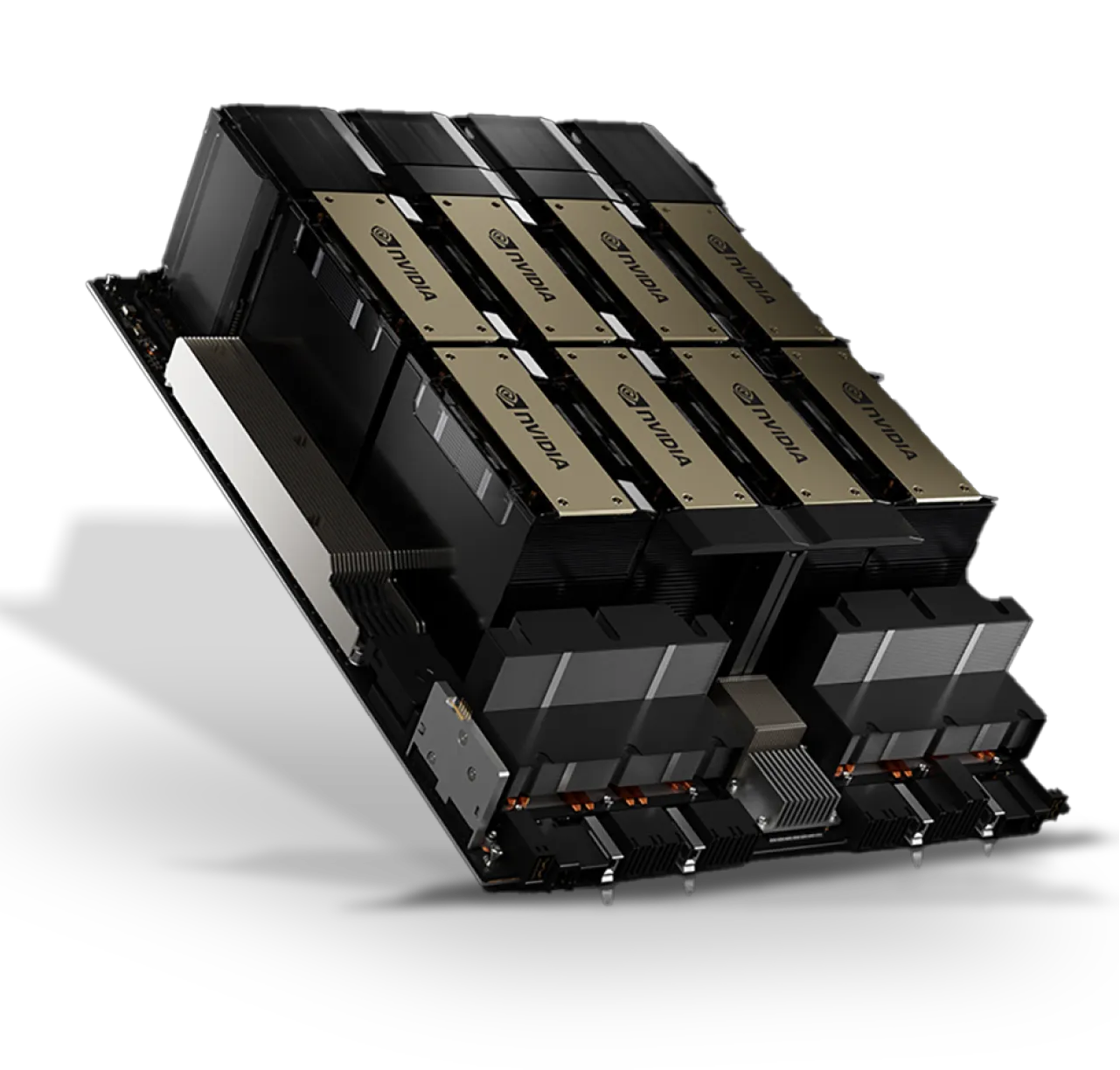

SXM Form Factor

Benefit from high-density GPU configurations, efficient cooling, and optimized energy consumption with the superior SXM form factor design.

DGX Reference Architecture

Built on NVIDIA DGX reference architecture, ensuring seamless integration into enterprise ecosystems with proven reliability for AI applications.

Scalable Design

Deploy with flexible configurations through a modular architecture that adapts to evolving computational requirements and growing workloads.

Enhanced TDP

Access up to 700W TDP for maximum computational performance, ideal for the most intensive AI and high-performance computing applications.

NVLink & NVSwitch

Experience significantly higher interconnect bandwidth with advanced NVLink and NVSwitch technologies, surpassing traditional PCIe connections.
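As a back-of-the-envelope illustration of that gap, the sketch below compares idealized transfer times using the peak figures from the specifications (NVLink at 900GB/s, PCIe Gen5 at 128GB/s). It assumes the full peak bandwidth is available and ignores latency and protocol overhead, so real transfers will be slower. The helper `transfer_seconds` and the 80GB payload are illustrative choices only.

```python
NVLINK_GB_PER_S = 900      # peak NVLink bandwidth from the specifications
PCIE_GEN5_GB_PER_S = 128   # peak PCIe Gen5 bandwidth from the specifications


def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time: payload size divided by peak bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


# Assumption: move one GPU's worth of memory (80GB) between devices.
payload_gb = 80
nvlink_s = transfer_seconds(payload_gb, NVLINK_GB_PER_S)
pcie_s = transfer_seconds(payload_gb, PCIE_GEN5_GB_PER_S)

print(f"NVLink:    {nvlink_s:.3f} s")
print(f"PCIe Gen5: {pcie_s:.3f} s")
print(f"Ratio:     {pcie_s / nvlink_s:.1f}x")
```

At peak rates the ratio is simply 900 / 128, or about 7x in favor of NVLink, regardless of payload size.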

GPU Direct Technology

Enhance data movement efficiency with direct GPU memory access, eliminating unnecessary copies, reducing CPU overhead, and minimizing latency.

Technical Specifications

VM: NVIDIA H100 SXM

Generation: Hopper Architecture

Memory: 80GB HBM3

| Specification | NVIDIA H100 SXM |
|---|---|
| Form Factor | 8x NVIDIA H100 SXM |
| FP64 | 34 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS |
| FP32 | 67 teraFLOPS |
| TF32 Tensor Core (with sparsity) | 989 teraFLOPS |
| FP16 Tensor Core (with sparsity) | 1,979 teraFLOPS |
| FP8 Tensor Core (with sparsity) | 3,958 teraFLOPS |
| INT8 Tensor Core (with sparsity) | 3,958 TOPS |
| GPU Memory | 80GB |
| GPU Memory Bandwidth | 3.35TB/s |
| Connectivity | 3.2 Tbps |
| Max TDP | Up to 700W |
| Interconnect | NVLink: 900GB/s, PCIe Gen5: 128GB/s |
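One way to read the memory figure is to ask how large a model's weights can be before they no longer fit. The sketch below is a weights-only upper bound, assuming 80GB per GPU (decimal gigabytes) and the usual bytes-per-parameter for each precision. It deliberately ignores activations, optimizer state, and KV cache, so practical limits are lower. The function name `max_params_billions` is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1, "int8": 1}
GPU_MEMORY_GB = 80  # per-GPU memory from the specifications (1 GB = 1e9 bytes)


def max_params_billions(precision: str, num_gpus: int = 1) -> float:
    """Upper bound on parameters (in billions) whose weights alone fit in memory."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    bytes_per_param = BYTES_PER_PARAM[precision]
    total_bytes = num_gpus * GPU_MEMORY_GB * 1e9
    return total_bytes / bytes_per_param / 1e9


# Compare a single GPU with an 8-GPU node at each precision.
for precision in ("fp32", "fp16", "fp8"):
    single = max_params_billions(precision)
    node = max_params_billions(precision, num_gpus=8)
    print(f"{precision}: {single:.0f}B on 1 GPU, {node:.0f}B on 8 GPUs")
```

For example, FP16 weights take 2 bytes per parameter, so a single 80GB GPU holds at most about 40 billion parameters before any working memory is accounted for.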

Frequently Asked Questions

What is the NVIDIA H100 SXM?

The NVIDIA H100 SXM is a powerful GPU designed specifically for data centers, optimized for demanding workloads including AI development, scientific simulations, and large-scale data analytics, featuring advanced performance capabilities with the Hopper architecture.

What's the difference between NVIDIA's H100 SXM and PCIe models?

While both are powerful GPUs, the H100 SXM offers superior connectivity and performance compared to the PCIe variant. Both models provide 80GB of GPU memory, but the SXM model pairs it with higher memory bandwidth (3.35TB/s), faster GPU-to-GPU transfers over NVLink (900GB/s), and a higher power limit (up to 700W) for extreme computational tasks.

How much faster is the NVIDIA H100 than previous generation GPUs?

The NVIDIA H100 delivers up to 30x faster inference and up to 9x faster training than the previous-generation A100. Actual gains depend on the model and workload.

What is the TDP of the NVIDIA H100 SXM?

The NVIDIA H100 SXM operates with a Thermal Design Power (TDP) of up to 700W, allowing it to deliver exceptional computational performance for the most demanding enterprise-grade AI and HPC applications.

How can I get started with the NVIDIA H100 SXM on ECCENTRIC WORLD NV?

Starting with the NVIDIA H100 SXM is straightforward: create an account or log in to our platform to gain immediate access. Our user-friendly interface allows you to configure and launch your instances quickly with no waiting periods.
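Once an instance is running, a quick sanity check can confirm that the expected GPUs are visible. The sketch below is one possible approach, not a platform-provided tool. It assumes the NVIDIA driver and the `nvidia-smi` utility are installed on the instance, and it queries the `name`, `memory.total`, and `power.limit` fields. The helper names `parse_gpu_rows` and `query_gpus` are hypothetical, and the parser assumes every field is reported numerically.

```python
import subprocess

# Fields requested from nvidia-smi; memory is reported in MiB, power in watts.
QUERY_FIELDS = "name,memory.total,power.limit"


def parse_gpu_rows(csv_text: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, memory_mib, power_w = (field.strip() for field in line.split(","))
        gpus.append(
            {
                "name": name,
                "memory_mib": float(memory_mib),
                "power_limit_w": float(power_w),
            }
        )
    return gpus


def query_gpus() -> list[dict]:
    """Run nvidia-smi on this machine and return one dict per visible GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,  # raise if nvidia-smi exits with an error
    )
    return parse_gpu_rows(result.stdout)
```

Calling `query_gpus()` on an 8-GPU instance should return eight entries. A shorter list, or a power limit below what the instance type advertises, is worth raising with support before starting a long job.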

What GPU memory does the NVIDIA H100 SXM provide?

The NVIDIA H100 SXM comes equipped with 80GB of high-bandwidth HBM3 memory, providing substantial capacity for handling complex AI models and processing large datasets with exceptional efficiency.

On-Demand

Access powerful H100 SXM GPUs immediately with no waitlists or capacity restrictions.

Cost-Effective

Utilize high-performance GPU technology with transparent, competitive pricing and reservation options.

Scalable

Scale your computational resources seamlessly from 8 up to 16,384 GPUs in a single cluster as your projects grow.